Autonomous vehicles require the ability to react to conditions as fast and as accurately as a human in the same situation. Machine learning and artificial intelligence are being explored to make this happen, but each requires extensive computing power that is immediately available.

As with many new technologies, there are issues beyond adoption by OEMs (original-equipment manufacturers). One is regulation, and the current status regarding autonomous vehicles can be summed up in one word: confusing. With few policies at the state and federal levels regarding automated vehicles, AASHTO (American Assn. of State Highway and Transportation Officials) wants to see a uniform national policy to avoid the patchwork of state policies that is emerging now. Currently, only certain states allow testing and deployment of automated vehicles, and those policies vary from state to state, making interstate travel by autonomous vehicles difficult to plan.

Funding for both digital and physical infrastructure that improves safety and advances technologies will also be needed. Connected and automated vehicles are in the near future, which means the technology—V2V (vehicle-to-vehicle), V2I (vehicle-to-infrastructure), V2X (vehicle-to-everything)—that uses the existing wireless and cellular networks will demand extensive build-out of that infrastructure.

To ensure the safety and efficiency of these technologies, AASHTO strongly supports the preservation of the 5.9 GHz spectrum exclusively for vehicle technologies. However, the association and the FCC (Federal Communications Commission) have been in a battle over rights to that spectrum.

While fully self-driving cars may still be in the future, the impact of automation is already being felt as human drivers are given more technology to help them make better decisions.

Automotive safety features that would have been considered premium add-ons in the past—such as adaptive cruise control or blind-spot monitoring—are becoming standard as ADAS (advanced driver-assistance systems) technology becomes more affordable to implement in vehicles and becomes mainstream in more models.

According to Texas Instruments, ADAS technology enables cars to take actions similar to a driver—sensing weather conditions or detecting objects on the road—and make decisions in real time to improve safety. ADAS features can include automatic emergency braking, driver monitoring, forward collision warning, and adaptive cruise control. Collectively, this technology has the potential to prevent as many as 2.7 million collisions a year in the U.S. alone.

ADAS makes travel safer and easier for millions of car owners worldwide. The central ADAS challenge lies in replicating a human driver’s ability to interpret visual data. A dilemma quickly arises, however, as obtaining better data generally requires more and more sensors—until the vehicle’s microprocessors simply cannot integrate all the data in realtime.

Kyocera, a broad-based technology company in Japan, is applying convergence, miniaturization, and solid-state conversion for multifunctional sensors that can simplify ADAS design and help contain processing requirements. The company’s latest ADAS innovations represent an integration of technologies in advanced materials, components, devices, and communications infrastructure.

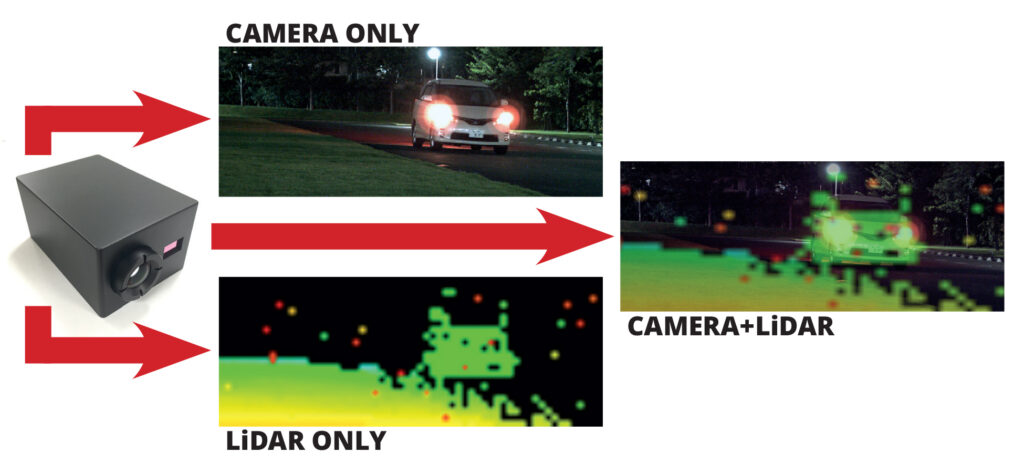

When discussing visual data acquisition, the immediate thought goes to cameras and lenses or LiDAR (light detection and ranging). Cameras are ideal for detecting the color and shape of an object; LiDAR excels in measuring distance and creating highly accurate three-dimensional images. Because cameras and LiDAR each offer unique benefits, they are often employed in combination.

However, digital imaging from two units that don’t share the same optical axis exhibits a deviation error known as parallax. A computer can theoretically integrate two data channels to correct parallax error, but the resulting time lag creates an obstacle to any application requiring highly accurate, realtime information, such as driving.

Kyocera’s approach is to use a common lens for both camera and LiDAR sensor, combined into one unit. Its patented Camera-LiDAR Fusion Sensor combines a camera with LiDAR to provide highly accurate images in realtime. With a single unit, the process of integrating camera and LiDAR data is greatly simplified, allowing timely and accurate object detection with no delay to improve driving safety. And, because both devices use the same lens, the camera and LiDAR signals have identical optical axes, resulting in high-resolution 3D images with no parallax deviation.
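The parallax problem, and why a shared lens removes it, can be illustrated with the standard pinhole-camera relationship: the pixel offset between two parallel sensors is roughly the focal length times the separation between their optical axes, divided by the distance to the object. The sketch below is only an illustration. The function name, the 10cm separation, and the 1,000-pixel focal length are assumed values, not figures from Kyocera.

```python
def parallax_offset_px(baseline_m, depth_m, focal_length_px):
    """Approximate pixel offset between two parallel sensors (pinhole model).

    baseline_m      -- distance between the two optical axes (hypothetical)
    depth_m         -- distance from the sensors to the object
    focal_length_px -- focal length expressed in pixels (hypothetical)
    """
    if depth_m <= 0:
        raise ValueError("depth_m must be positive")
    return focal_length_px * baseline_m / depth_m


# Assumed setup: camera and LiDAR mounted 10cm apart.
for depth in (2.0, 10.0, 50.0):
    offset = parallax_offset_px(0.10, depth, 1000.0)
    print(f"object at {depth:5.1f} m -> offset of about {offset:.1f} px")

# A shared lens means the optical axes coincide, so the baseline is zero
# and the offset vanishes at every distance.
print(parallax_offset_px(0.0, 2.0, 1000.0))
```

Because the offset changes with distance, no single fixed shift aligns the two images, which is why software correction has to be done per object and costs time. With a zero baseline there is nothing to correct.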

While other LiDAR systems have used a motor to continuously rotate a mirror as they scan an image, mechanical assemblies introduce reliability issues amid the shock and vibration of normal driving. Kyocera has developed a solid-state solution using a MEMS (micro-electromechanical system) mirror that eliminates the conventional rotating assembly. It is based on advanced ceramic technology Kyocera developed.

While currently in R&D, Kyocera’s Camera-LiDAR Fusion Sensor is targeted for release by March 2025. It is expected to be used both in vehicles and in various other fields, such as construction, robotics, industrial equipment, and security systems that can recognize people and objects.

Another technology that has grown in use alongside LiDAR is radar (radio detection and ranging). Radar, developed in the 1930s and early 1940s, is based on the same concept as LiDAR but uses radio waves instead of light to measure distance, speed, and angle by calculating time and frequency differences in the returning signal. One key feature of radar is that its accuracy is not easily degraded by weather or backlighting.
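As a rough illustration of how time and frequency differences translate into distance and speed, the sketch below uses the textbook relationships: range is half the round-trip delay times the speed of light, and radial speed follows from the Doppler shift. The function names and sample values are assumptions chosen for illustration and do not describe any specific product.

```python
C = 299_792_458.0  # speed of light in m/s


def range_from_delay(round_trip_s):
    """Distance to the target from the echo's round-trip time."""
    return C * round_trip_s / 2.0


def radial_speed_from_doppler(doppler_hz, carrier_hz):
    """Closing speed from the Doppler shift, using f_d = 2 * v * f_c / c."""
    return doppler_hz * C / (2.0 * carrier_hz)


# Assumed sample values: a 1-microsecond echo and a 5kHz shift at 77GHz.
print(f"range: {range_from_delay(1e-6):.1f} m")
print(f"speed: {radial_speed_from_doppler(5_000.0, 77e9):.2f} m/s")
```

With these sample inputs the target is about 150m away and closing at just under 10m/s. Angle is typically estimated separately, by comparing the signal across several antenna elements.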

Texas Instruments has a complete radar system on a single semiconductor chip. The mmWave radar sensor chip, a high-frequency radar sensor the size of a coin, has enough resolution to detect the presence of an obstacle and to distinguish a car from a pedestrian. By combining this with an integrated DSP (digital signal processor), the technology enables machine vision algorithms for object classification to be run directly on the chip itself. This integration simplifies the task of the ADAS manufacturer and helps create a system that consumes little power, is small enough to fit in a rear bumper, and comes at a nominal cost.

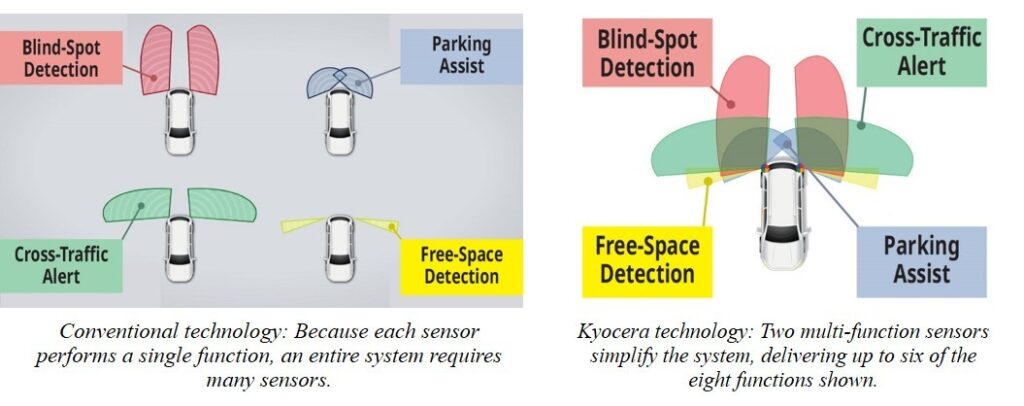

Because millimeter-wave radar is widely used in ADAS applications, many vehicle platforms incorporate multiple radar sensors for various purposes. For example, individual sensors are commonly installed for front view, blind-spot, collision detection, parking-assist, exit detection, and/or fully automatic parking, among others. As a result, ADAS system complexity and processing requirements are continually rising.

Kyocera has developed a multifunctional millimeter-wave radar technology that can detect multiple objects at different distances and directions using a single millimeter-wave sensor rather than a more complex array or multiple sensors. With Kyocera’s millimeter-wave technology, functions currently requiring multiple sensors can be integrated through high-speed switching into a single radar module. This allows the total number of radar sensors to be reduced, contributing to lower cost and simpler design.

By using the 79GHz band, Kyocera has succeeded in miniaturizing antenna volume and area, resulting in a compact module measuring just 48 × 59 × 21mm (1.9 × 2.3 × 0.8 inches). Although small, the unit is highly accurate. Millimeter-wave radar range accuracy depends on the bandwidth of the frequencies used, and Kyocera’s Multifunctional Millimeter-Wave Radar Module takes full advantage of its 4GHz bandwidth, spanning 77GHz to 81GHz. As a result, it can deliver highly precise distance measurements, accurate to within just 5cm (about 2 inches).
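The link between bandwidth and range performance can be sketched with the textbook range-resolution formula for radar, which is the speed of light divided by twice the bandwidth. This is a general relationship, not Kyocera’s published method, and resolution (the ability to separate two nearby targets) is related to but not the same as the 5cm accuracy figure quoted above. The function name is hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution_m(bandwidth_hz):
    """Theoretical range resolution: c / (2 * B)."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth_hz must be positive")
    return C / (2.0 * bandwidth_hz)


# Compare a narrower 1GHz sweep (assumed for contrast) with the
# 4GHz width of the 77GHz to 81GHz band.
for bandwidth in (1e9, 4e9):
    cm = range_resolution_m(bandwidth) * 100.0
    print(f"{bandwidth / 1e9:.0f} GHz bandwidth -> about {cm:.2f} cm")
```

At 4GHz the theoretical resolution works out to roughly 3.75cm, which is in the same range as the accuracy the module claims. Quadrupling the bandwidth cuts the resolution cell to a quarter.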

In general, conventional millimeter-wave radar senses the same spot at regular intervals (for example, 50ms, 100ms, etc.). In contrast, Kyocera’s high-speed switching allows for simultaneous sensing of multiple areas. As a result, a single module can offer blind-spot detection, free-space detection, and/or other sensing functions simultaneously. In principle, modules providing three different sensing functions could allow a system of 12 single-function sensors to be replaced with a future system of just four multifunctional modules.
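The consolidation arithmetic is simple enough to check directly. The helper below is a hypothetical illustration that assumes every module can cover the same number of functions and that coverage zones line up. A real vehicle layout would also have to account for mounting position and field of view.

```python
import math


def modules_needed(single_function_sensors, functions_per_module):
    """Number of multifunctional modules needed to replace single-function sensors."""
    if functions_per_module < 1:
        raise ValueError("functions_per_module must be at least 1")
    return math.ceil(single_function_sensors / functions_per_module)


print(modules_needed(12, 3))  # prints 4
```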

Kyocera’s millimeter-wave radar innovation takes advantage of “adaptive-array” antenna technology, which the company developed for commercial telecommunications network infrastructure. This “beam-forming” transmit-and-receive technology allows fast and accurate estimation of the receive angle of multiple signals, and arbitrary setting of both transmit and receive timing. As a result, mutual interference with other millimeter-wave radar signals can be reduced or eliminated.
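One common way an antenna array estimates receive angle is by comparing the phase of the same signal at two neighboring elements. The sketch below shows that textbook two-element calculation only. It is not a description of Kyocera’s adaptive-array algorithm, and the function name, half-wavelength spacing, and sample phase difference are assumptions.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def angle_of_arrival_deg(phase_diff_rad, spacing_m, carrier_hz):
    """Angle from broadside, from the phase difference between two elements.

    Uses sin(theta) = phase_diff * wavelength / (2 * pi * spacing).
    """
    wavelength = C / carrier_hz
    sin_theta = phase_diff_rad * wavelength / (2.0 * math.pi * spacing_m)
    if abs(sin_theta) > 1.0:
        raise ValueError("phase difference is not physical for this spacing")
    return math.degrees(math.asin(sin_theta))


carrier = 79e9                    # 79GHz band
spacing = (C / carrier) / 2.0     # assumed half-wavelength element spacing
angle = angle_of_arrival_deg(math.pi / 2, spacing, carrier)
print(f"estimated angle: {angle:.1f} degrees")
```

With half-wavelength spacing, a quarter-cycle phase difference corresponds to a target about 30 degrees off the array’s center line. At 79GHz the wavelength is under 4mm, which is part of why the antenna can be made so small.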

Kyocera is currently developing a Multifunctional Millimeter-Wave Radar Module that utilizes 60GHz radar in addition to conventional 77GHz and 79GHz radar. Since the 60GHz band can be used both indoors and in vehicles, it is expected to have a variety of applications. In addition to ADAS, this module will be able to detect children left in cars, analyze the vibration waveforms and spectra of industrial machinery, or detect the heartbeat and respiration of a sleeping patient in a medical institution or nursing facility.

The primary concern in the design of any ADAS system is safety. That’s why processing is critical. Vehicles are growing smarter: connecting, communicating, monitoring, and making decisions that help prevent accidents. As they do, the computing power required to process the enormous amounts of data behind these advanced safety features has skyrocketed. And while radar on a chip makes the unit small enough to be employed anywhere, the computing power needed to release its full benefits is still a way off.
